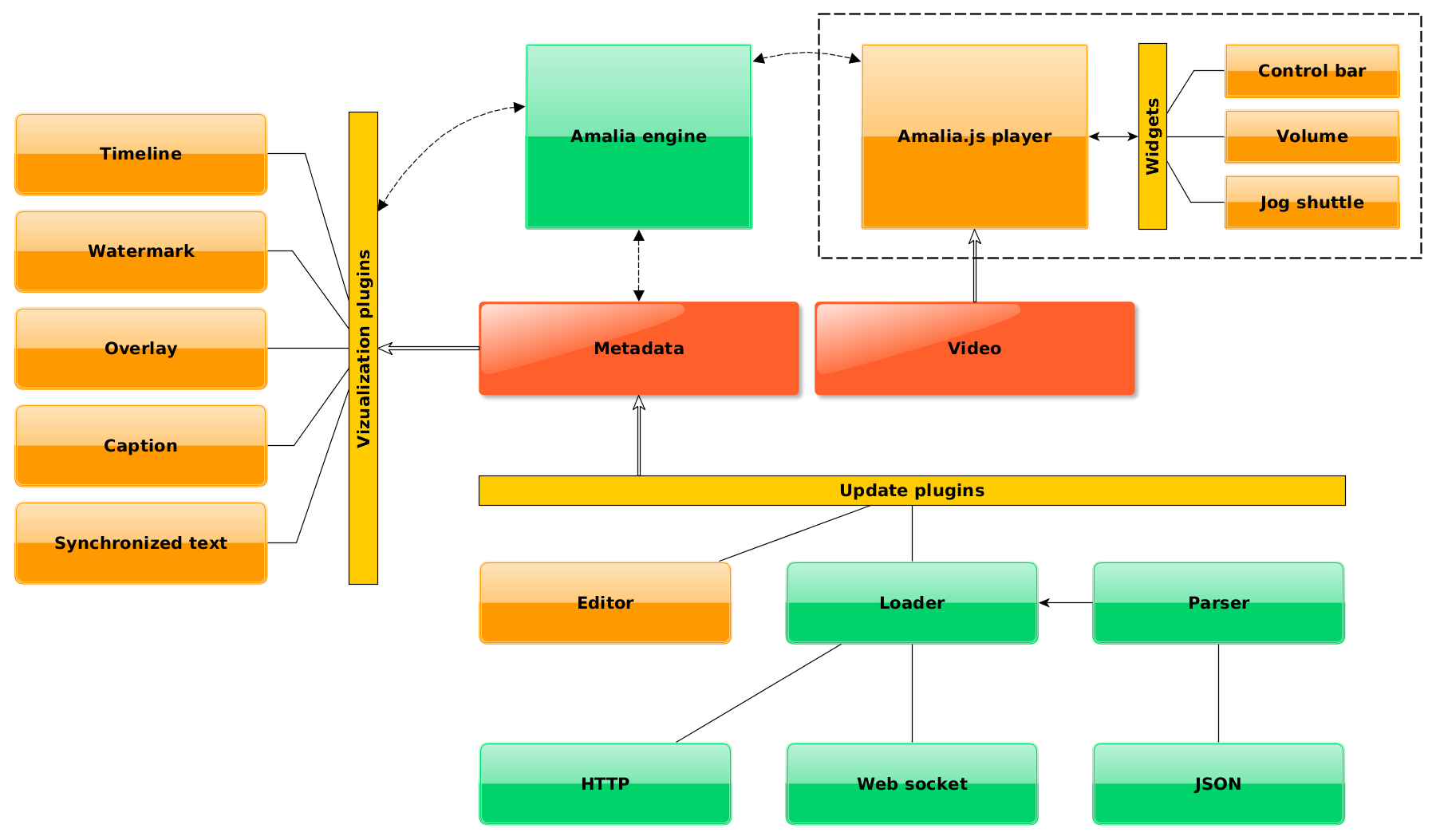

Global architecture

The player core component is a framework that provides real-time synchronization between the video viewing window and a set of plugins. Like the core, each plugin has its own set of parameters that define its behavior for a given application.

The main principle of amalia.js is a unified metadata model. Ensuring that all metadata types are consistent and follow the same standards makes them easier to use and lets us design generic visualization plugins. In a typical use of amalia.js, a video file comes with a few metadata blocks. When instantiating the player, the metadata blocks must be bound to the visualization plugins; this binding decides which metadata is displayed and how. A single metadata block can be bound to several visualization plugins, each responsible for displaying a specific facet of the data.

Metadata format

The player has to manage two main types of metadata: on one side, the metadata of media streams, described in this section; on the other side, the settings of the player and plugins and their interactions with applications, discussed in the following sections. A generic metadata model has been designed to represent all the metadata of a media stream. It can describe both the audio and video of the content, technical information, documentary notes and all the results of automatic metadata extraction algorithms. Currently, this model can only handle the metadata of a single stream. For analysis results over a corpus, which involve links between streams (clustering, mining, similarity detection...), a specific extension of the data model is needed and is currently being investigated.

A full XML schema has been written to represent this metadata model. The schema has many more elements than are strictly needed to display simple metadata with amalia.js. The current version is 0.2.5. We use this schema to generate Java classes with the JAXB plugin for Maven, and Jackson to serialize the metadata stream to Json. This Java implementation is available in a separate project, along with several helper methods exposed through a general factory (see documentation below). Json is currently the only supported metadata format.

Each metadata block has a unique id that is used to bind it to visualization plugins (see below). A metadata block should only represent a coherent and homogeneous set of data. If you need to display distinct types of data, or even several blocks of data of the same type (e.g. the outputs of different versions of the same algorithm), provide them as separate metadata blocks.
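Since the id is what a plugin binding refers to, two blocks loaded into the same player should never share one. The following Python sketch shows one way a loader could enforce this while building an id lookup table. It assumes the blocks are already decoded from Json into dictionaries; `index_blocks` and the example ids are hypothetical, not part of amalia.js or its Java model.

```python
from __future__ import annotations

from typing import Any


def index_blocks(blocks: list[dict[str, Any]]) -> dict[str, dict[str, Any]]:
    """Map each metadata block id to its block, rejecting duplicate ids.

    Hypothetical helper: amalia.js does not necessarily expose anything similar.
    """
    index: dict[str, dict[str, Any]] = {}
    for block in blocks:
        block_id = block["id"]
        if block_id in index:
            raise ValueError(f"duplicate metadata block id: {block_id!r}")
        index[block_id] = block
    return index


# Two outputs of the same (hypothetical) detector, kept as separate blocks.
blocks = [
    {"id": "faces-v1", "algorithm": "face-detector", "version": 1},
    {"id": "faces-v2", "algorithm": "face-detector", "version": 2},
]
print(sorted(index_blocks(blocks)))  # ['faces-v1', 'faces-v2']
```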

The model is generic and extensible; it can represent any metadata that is:

- localized temporally in the stream: at a given timecode (TC) or during a specific time segment (TCIn, TCOut)

- localized spatially in a video frame: currently, rectangle and ellipse bounding boxes are available

- hierarchical: each metadata item is associated with a specific level (TCLevel)

- labeled: each metadata item may have a label

- scored: each metadata item may have a confidence score

- extended: each metadata item can carry its own additional data that is not represented in this model
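To make these properties concrete, here is one possible in-memory representation in Python. It is only a sketch: the field names follow the Json keys used elsewhere on this page (`tc`, `tcin`, `tcout`, `tclevel`, `label`, `data`), while `score` is an assumed name for the confidence score, and spatial localisation is left out for brevity.

```python
from __future__ import annotations

from dataclasses import dataclass, field
from typing import Any, Optional


@dataclass
class Localisation:
    """Hypothetical view of one temporally localized metadata item."""
    tclevel: int                            # hierarchical level
    tc: Optional[str] = None                # either a single timecode...
    tcin: Optional[str] = None              # ...or a time segment [tcin, tcout]
    tcout: Optional[str] = None
    label: Optional[str] = None             # optional label
    score: Optional[float] = None           # assumed key for the confidence score
    data: Optional[dict[str, Any]] = None   # free-form extension data
    sublocalisations: list[Localisation] = field(default_factory=list)

    def is_segment(self) -> bool:
        """True when the item covers a time segment rather than a single instant."""
        return self.tcin is not None and self.tcout is not None


@dataclass
class MetadataBlock:
    id: str                                 # the only mandatory field
    type: Optional[str] = None
    localisation: list[Localisation] = field(default_factory=list)


root = Localisation(
    tclevel=0, tcin="00:00:00.0000", tcout="00:01:00.0000",
    sublocalisations=[Localisation(tclevel=1, tc="00:00:30.0000", label="A demo label !")],
)
block = MetadataBlock(id="amalia-simple01", type="simple", localisation=[root])
print(root.is_segment(), root.sublocalisations[0].is_segment())  # True False
```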

The timecodes in the `tc`, `tcin` and `tcout` fields must match the following regular expression (hh:mm:ss.SSSS):

`[0-9]{2}:[0-9]{2}:[0-9]{2}\.[0-9]{4}`

Using 4 digits for the sub-second field is useful when dealing with precise temporal metadata, especially audio description.
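Plugins usually need timecodes as numbers to compare them with the current playback position. A minimal Python sketch of both conversions follows; it assumes the four trailing digits are a decimal fraction of a second (1/10000 s), and `parse_timecode` and `format_timecode` are illustrative names. The dot is escaped in the pattern so that it only matches a literal '.' separator.

```python
import re

# hh:mm:ss.SSSS with a literal dot before the four sub-second digits.
TIMECODE_RE = re.compile(r"([0-9]{2}):([0-9]{2}):([0-9]{2})\.([0-9]{4})")


def parse_timecode(tc: str) -> float:
    """Convert an hh:mm:ss.SSSS timecode into seconds.

    Assumes the last four digits are a decimal fraction of a second.
    """
    match = TIMECODE_RE.fullmatch(tc)
    if match is None:
        raise ValueError(f"invalid timecode: {tc!r}")
    hours, minutes, seconds, fraction = match.groups()
    return int(hours) * 3600 + int(minutes) * 60 + int(seconds) + int(fraction) / 10_000


def format_timecode(seconds: float) -> str:
    """Inverse of parse_timecode, rounded to the nearest 1/10000 s."""
    ticks = round(seconds * 10_000)
    total_seconds, fraction = divmod(ticks, 10_000)
    hours, rest = divmod(total_seconds, 3600)
    minutes, secs = divmod(rest, 60)
    return f"{hours:02d}:{minutes:02d}:{secs:02d}.{fraction:04d}"


print(parse_timecode("01:02:03.5000"))  # 3723.5
print(format_timecode(30.0))            # 00:00:30.0000
```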

The only mandatory field of a metadata block is its `id`. All the other fields are, for the moment, provided for information purposes only. In the near future, the content of the `type` field will be normalized.
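Because only `id` is mandatory, code that consumes metadata blocks should treat every other field as optional. The Python sketch below checks the id and reads a few informational fields defensively; it assumes the id is a non-empty string (as in the examples on this page), and `summarize_block` is a hypothetical name.

```python
from __future__ import annotations

from typing import Any


def summarize_block(block: dict[str, Any]) -> dict[str, Any]:
    """Validate the mandatory id, then read informational fields defensively."""
    block_id = block.get("id")
    if not isinstance(block_id, str) or not block_id:
        raise ValueError("metadata block is missing its mandatory 'id'")
    return {
        "id": block_id,
        # Informational fields: absent values are simply reported as None.
        "type": block.get("type"),
        "algorithm": block.get("algorithm"),
        "version": block.get("version"),
        "localisations": len(block.get("localisation") or []),
    }


print(summarize_block({"id": "amalia-simple01", "type": "simple"}))
# {'id': 'amalia-simple01', 'type': 'simple', 'algorithm': None, 'version': None, 'localisations': 0}
```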

All the temporal metadata are stored in the main `localisation` field. Each localisation carries its temporal information either as a single timecode (the `tc` field) or as a time segment (the `tcin` and `tcout` fields). The hierarchical structure of temporally localized metadata is represented by nested `localisation` blocks inside a `sublocalisations` field. Because a full pyramid of levels is not mandatory, and also to improve parsing performance, the level of each `localisation` is also given explicitly in a `tclevel` field.
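The explicit `tclevel` means a reader does not have to count nesting depth to know the level of a localisation, which still works when intermediate levels are missing. A Python sketch of such a traversal, assuming blocks decoded from Json into dictionaries (`walk_localisations` and `by_level` are hypothetical helpers):

```python
from __future__ import annotations

from typing import Any, Iterator


def walk_localisations(localisations: list[dict[str, Any]]) -> Iterator[dict[str, Any]]:
    """Yield every localisation of a tree, depth first, parents before children."""
    for loc in localisations:
        yield loc
        children = (loc.get("sublocalisations") or {}).get("localisation") or []
        yield from walk_localisations(children)


def by_level(block: dict[str, Any], level: int) -> list[dict[str, Any]]:
    """Return the localisations whose explicit tclevel equals `level`.

    No depth counting is needed, so a missing intermediate level is harmless.
    """
    return [loc for loc in walk_localisations(block.get("localisation") or [])
            if loc.get("tclevel") == level]


# Level 1 is skipped on purpose: a level-2 item sits directly under level 0.
block = {
    "id": "sparse-levels",
    "localisation": [{
        "tcin": "00:00:00.0000", "tcout": "00:01:00.0000", "tclevel": 0,
        "sublocalisations": {"localisation": [
            {"tc": "00:00:30.0000", "tclevel": 2, "label": "deep item"},
        ]},
    }],
}
print([loc.get("label") for loc in by_level(block, 2)])  # ['deep item']
print(by_level(block, 1))                                # []
```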

The model will be fully documented later. If you need to add specific information that our model does not yet handle, you can still use the `data` field to store any Json block with your own structure and access it from your plugins.
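Since the content of `data` is free-form, a consumer should not assume any particular shape. A small Python sketch of a defensive accessor follows; the keys inside `data` (`speaker`, `keywords`) and the `extension` helper are made up for illustration.

```python
from __future__ import annotations

import json
from typing import Any

# A localisation carrying a free-form "data" block (hypothetical keys).
LOCALISATION = json.loads("""
{
  "tc": "00:00:12.5000",
  "tclevel": 1,
  "label": "Interview",
  "data": {"speaker": "guest-2", "keywords": ["budget", "vote"]}
}
""")


def extension(localisation: dict[str, Any], key: str, default: Any = None) -> Any:
    """Read one key from the optional "data" block, tolerating its absence.

    "data" may hold any Json value; only Json objects are searched by key.
    """
    data = localisation.get("data")
    if not isinstance(data, dict):
        return default
    return data.get(key, default)


print(extension(LOCALISATION, "speaker"))         # guest-2
print(extension(LOCALISATION, "missing", "n/a"))  # n/a
```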

The following example shows a simple metadata block for a 1-minute video. The id of this block, which is used to bind it to a visualization plugin, is `amalia-simple01`. Its root localisation covers the whole minute (`tclevel` 0) and contains a single labeled item at 30 seconds (`tclevel` 1).

```json
{
  "id": "amalia-simple01",
  "type": "simple",
  "algorithm": "demo-json-generator",
  "processor": "Ina Research Department - N. HERVE",
  "processed": 1418900533632,
  "version": 1,
  "localisation": [
    {
      "type": "simple",
      "tcin": "00:00:00.0000",
      "tcout": "00:01:00.0000",
      "tclevel": 0,
      "sublocalisations": {
        "localisation": [
          {
            "label": "A demo label !",
            "tc": "00:00:30.0000",
            "tclevel": 1
          }
        ]
      }
    }
  ]
}
```
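As a quick check of the structure, the Python sketch below decodes the `amalia-simple01` block with the standard `json` module and collects the labeled items found directly under each root localisation. `labelled_points` is an illustrative helper that only looks one level deep, which is enough for this example.

```python
from __future__ import annotations

import json

# The amalia-simple01 example block, inlined so this snippet runs on its own.
EXAMPLE_BLOCK = """
{
  "id": "amalia-simple01",
  "type": "simple",
  "algorithm": "demo-json-generator",
  "processor": "Ina Research Department - N. HERVE",
  "processed": 1418900533632,
  "version": 1,
  "localisation": [{
    "type": "simple",
    "tcin": "00:00:00.0000",
    "tcout": "00:01:00.0000",
    "tclevel": 0,
    "sublocalisations": {"localisation": [
      {"label": "A demo label !", "tc": "00:00:30.0000", "tclevel": 1}
    ]}
  }]
}
"""


def labelled_points(block: dict) -> list[tuple[str, str]]:
    """Collect (tc, label) pairs from the direct children of each root localisation."""
    points = []
    for root in block["localisation"]:
        for child in (root.get("sublocalisations") or {}).get("localisation") or []:
            if "tc" in child and "label" in child:
                points.append((child["tc"], child["label"]))
    return points


block = json.loads(EXAMPLE_BLOCK)
print(block["id"], labelled_points(block))
# amalia-simple01 [('00:00:30.0000', 'A demo label !')]
```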

You may also look at the Json files used in the following examples: cue points, segments, text and overlay.

Textual metadata

Textual metadata can be displayed by two specific plugins: caption and text synchronization.

Depending on your textual metadata, it may be useful to exploit its hierarchical structure. For instance, the words, sentences and paragraphs of a text may all be temporally localized. In that case, give each level of text its own `tclevel`. When binding a textual metadata block to one of the text visualization plugins, you then choose which level is displayed.
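Choosing a level then amounts to filtering localisations by `tclevel` and keeping the ones whose segment contains the current playback time. The Python sketch below illustrates this selection; it is not the code of the caption or text synchronization plugins. It assumes half-open segments [`tcin`, `tcout`), timecode fractions in 1/10000 s and hypothetical helper names, and the paragraph, sentence and word levels (1, 2, 3) in the sample data are invented.

```python
from __future__ import annotations

from typing import Any, Iterator


def to_seconds(tc: str) -> float:
    """hh:mm:ss.SSSS -> seconds (fraction assumed to be in 1/10000 s)."""
    hms, fraction = tc.split(".")
    hours, minutes, seconds = (int(part) for part in hms.split(":"))
    return hours * 3600 + minutes * 60 + seconds + int(fraction) / 10_000


def walk(localisations: list[dict[str, Any]]) -> Iterator[dict[str, Any]]:
    """Depth-first traversal of nested localisations."""
    for loc in localisations:
        yield loc
        yield from walk((loc.get("sublocalisations") or {}).get("localisation") or [])


def text_at(block: dict[str, Any], level: int, t: float) -> list[str]:
    """Labels at the given tclevel whose [tcin, tcout) segment contains t."""
    return [
        loc["label"]
        for loc in walk(block.get("localisation") or [])
        if loc.get("tclevel") == level
        and "tcin" in loc and "tcout" in loc and "label" in loc
        and to_seconds(loc["tcin"]) <= t < to_seconds(loc["tcout"])
    ]


SAMPLE = {
    "id": "demo-text",
    "localisation": [{
        "tcin": "00:00:00.0000", "tcout": "00:00:10.0000", "tclevel": 1,
        "label": "Hello world. Bye.",
        "sublocalisations": {"localisation": [
            {"tcin": "00:00:00.0000", "tcout": "00:00:04.0000", "tclevel": 2,
             "label": "Hello world.",
             "sublocalisations": {"localisation": [
                 {"tcin": "00:00:00.0000", "tcout": "00:00:02.0000", "tclevel": 3, "label": "Hello"},
                 {"tcin": "00:00:02.0000", "tcout": "00:00:04.0000", "tclevel": 3, "label": "world."},
             ]}},
            {"tcin": "00:00:04.0000", "tcout": "00:00:10.0000", "tclevel": 2, "label": "Bye."},
        ]},
    }],
}

print(text_at(SAMPLE, 2, 3.0))  # ['Hello world.']
print(text_at(SAMPLE, 3, 3.0))  # ['world.']
```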

Spatial metadata

The overlay plugin can display the spatial localisation of metadata.

Currently, only rectangle and ellipse bounding boxes are supported. All coordinates (x, y, w, h, ...) are real values between 0 and 1, normalized to the media size. The orientation o is a real value between -π and π.
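Because coordinates are normalized, a renderer has to scale them back to the displayed video size. A minimal Python sketch, assuming a box described by the x, y, w, h and o values named above; the `Box` class is hypothetical, and whether x and y denote a corner or a center does not matter here because each value is scaled the same way.

```python
from __future__ import annotations

import math
from dataclasses import dataclass


@dataclass
class Box:
    """Hypothetical bounding box with normalized coordinates."""
    x: float
    y: float
    w: float
    h: float
    o: float = 0.0  # orientation, expected in [-pi, pi]

    def validate(self) -> None:
        """Raise ValueError if a value falls outside its documented range."""
        for name in ("x", "y", "w", "h"):
            value = getattr(self, name)
            if not 0.0 <= value <= 1.0:
                raise ValueError(f"{name}={value} is not in [0, 1]")
        if not -math.pi <= self.o <= math.pi:
            raise ValueError(f"o={self.o} is not in [-pi, pi]")

    def to_pixels(self, width: int, height: int) -> tuple[float, float, float, float]:
        """Scale x and w by the frame width, y and h by the frame height."""
        self.validate()
        return (self.x * width, self.y * height, self.w * width, self.h * height)


print(Box(0.25, 0.5, 0.5, 0.25).to_pixels(1920, 1080))  # (480.0, 540.0, 960.0, 270.0)
```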

To track an object, your metadata needs a localisation with the correct duration (`tcin` and `tcout` fields). Then add a spatials block to this localisation; each track is represented by a spatial block. A spatial block only holds the key points of the object's trajectory, each with its own timecode (`tc`). Between these key points, the position of the bounding box is linearly interpolated by the overlay plugin (currently, the interpolation is actually done by the Raphaël library).

See the example Json file of the overlay plugin for more details.
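The interpolation between key points can be sketched as follows in Python. This is a plain linear interpolation written for illustration, not the Raphaël code the overlay plugin relies on. It assumes the key points have already been converted to seconds and sorted by time, and it leaves the orientation out because interpolating angles needs extra care around ±π. `KeyPoint` and `interpolate` are hypothetical names.

```python
from __future__ import annotations

from bisect import bisect_right
from dataclasses import dataclass


@dataclass(frozen=True)
class KeyPoint:
    t: float  # seconds, converted from the key point's tc
    x: float
    y: float
    w: float
    h: float


def interpolate(track: list[KeyPoint], t: float) -> KeyPoint | None:
    """Linearly interpolate the box between the key points surrounding t.

    Returns None outside the time span covered by the track.
    """
    if not track or t < track[0].t or t > track[-1].t:
        return None
    i = bisect_right([kp.t for kp in track], t)
    if i == len(track):  # t is exactly the last key point
        return track[-1]
    a, b = track[i - 1], track[i]  # a.t <= t < b.t
    r = (t - a.t) / (b.t - a.t)

    def lerp(u: float, v: float) -> float:
        return u + (v - u) * r

    return KeyPoint(t, lerp(a.x, b.x), lerp(a.y, b.y), lerp(a.w, b.w), lerp(a.h, b.h))


track = [KeyPoint(0.0, 0.0, 0.0, 0.1, 0.1), KeyPoint(10.0, 0.5, 0.5, 0.2, 0.3)]
print(interpolate(track, 5.0))
```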

Metadata binding

Binding can be either static, where you explicitly associate a metadata block with a plugin, or dynamic, where the associations are based on metadata types. Providing a metadata block id to a plugin activates static binding; if you don't provide one, dynamic binding is activated. The following table lists the automatic bindings between metadata types and visualization plugins:

| Type | Description | Key |
|---|---|---|
| DEFAULT | | `fr.ina.amalia.player.PluginBindingManager.dataTypes.DEFAULT` |
| DETECTION | Mapped to the timeline plugin in cue point mode | `fr.ina.amalia.player.PluginBindingManager.dataTypes.DETECTION` |
| VISUAL_DETECTION | Mapped to the timeline plugin in visual component mode and to the overlay plugin | `fr.ina.amalia.player.PluginBindingManager.dataTypes.VISUAL_DETECTION` |
| VISUAL_TRACKING | Mapped to the timeline plugin in visual component mode and to the overlay plugin | `fr.ina.amalia.player.PluginBindingManager.dataTypes.VISUAL_TRACKING` |
| SEGMENTATION | Mapped to the timeline plugin in segment mode | `fr.ina.amalia.player.PluginBindingManager.dataTypes.SEGMENTATION` |
| AUDIO_SEGMENTATION | Mapped to the timeline plugin in segment mode | `fr.ina.amalia.player.PluginBindingManager.dataTypes.AUDIO_SEGMENTATION` |
| TRANSCRIPTION | Mapped to the text synchronization plugin and the caption plugin | `fr.ina.amalia.player.PluginBindingManager.dataTypes.TRANSCRIPTION` |
| SYNCHRONIZED_TEXT | Mapped to the text synchronization plugin and the caption plugin | `fr.ina.amalia.player.PluginBindingManager.dataTypes.SYNCHRONIZED_TEXT` |
| KEYFRAMES | Mapped to the timeline plugin in image mode | `fr.ina.amalia.player.PluginBindingManager.dataTypes.KEYFRAMES` |
| HISTOGRAM | Mapped to the timeline plugin in histogram component mode | `fr.ina.amalia.player.PluginBindingManager.dataTypes.HISTOGRAM` |
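The selection rule can be summarized in a short Python sketch. It assumes a block's `type` field holds one of the type names from the table (for instance `"DETECTION"`), which this page does not confirm, and it uses made-up plugin labels such as `timeline/cuepoint` instead of real amalia.js plugin identifiers; `resolve_blocks` is hypothetical as well. DEFAULT is left unmapped because the table gives no plugin for it.

```python
from __future__ import annotations

from typing import Any, Optional

# Dynamic bindings transcribed from the table; plugin labels are illustrative.
TYPE_TO_PLUGINS: dict[str, list[str]] = {
    "DETECTION": ["timeline/cuepoint"],
    "VISUAL_DETECTION": ["timeline/visual", "overlay"],
    "VISUAL_TRACKING": ["timeline/visual", "overlay"],
    "SEGMENTATION": ["timeline/segment"],
    "AUDIO_SEGMENTATION": ["timeline/segment"],
    "TRANSCRIPTION": ["text-sync", "caption"],
    "SYNCHRONIZED_TEXT": ["text-sync", "caption"],
    "KEYFRAMES": ["timeline/image"],
    "HISTOGRAM": ["timeline/histogram"],
}


def resolve_blocks(plugin: str, blocks: list[dict[str, Any]],
                   metadata_id: Optional[str] = None) -> list[dict[str, Any]]:
    """Pick the metadata blocks a plugin should display.

    Static binding: an explicit metadata id selects that block only.
    Dynamic binding: without an id, every block whose type maps to the plugin.
    """
    if metadata_id is not None:
        return [block for block in blocks if block.get("id") == metadata_id]
    return [block for block in blocks
            if plugin in TYPE_TO_PLUGINS.get(block.get("type", ""), [])]


blocks = [
    {"id": "cuts", "type": "DETECTION"},
    {"id": "asr", "type": "TRANSCRIPTION"},
    {"id": "subtitles", "type": "SYNCHRONIZED_TEXT"},
]
print([b["id"] for b in resolve_blocks("caption", blocks)])          # ['asr', 'subtitles']
print([b["id"] for b in resolve_blocks("caption", blocks, "cuts")])  # ['cuts']
```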

Contact

For any information, you can contact us at

Institut National de l'Audiovisuel - INA
